Tails is a customer-led company. To support that goal, we use experiments, user research, and customer interviews to figure out what our customers need.

One of the cross-functional teams here is called Make Tails Irreplaceable (MTI). Engineering squads are one of the many moving parts of a cross-functional team. At the moment, my squad is focused on retaining customers who are early in their trial period.

Armed with oodles of information from our nutrition and data teams, we have been building and testing features that educate customers (e.g. how to transition between dog foods, how to use our digital product) so they are more likely to stick with us and have a happy and healthy dog for life. That, in turn, is what makes our service irreplaceable.

Smaller, Faster?

We recently had a squad shuffle to better focus on getting features to customers fast. My squad is now smaller than I'm used to, but the results have been surprising. We're made up of:

- Squad Lead
- 2x Engineer
- QA Engineer
- Product Manager
- Product Designer

Our meetings have shrunk and there's less engineering firepower, but we've been able to focus tightly on our goal of retaining customers. There's less context switching: I'm finding that our meetings end sooner and there's less overflow.

We are following the model of the Continuous Discovery Team. Teresa Torres summarises this:

Continuous discovery is: weekly touch points with customers, by the team building the product, where they conduct small research activities, in pursuit of a desired product outcome.

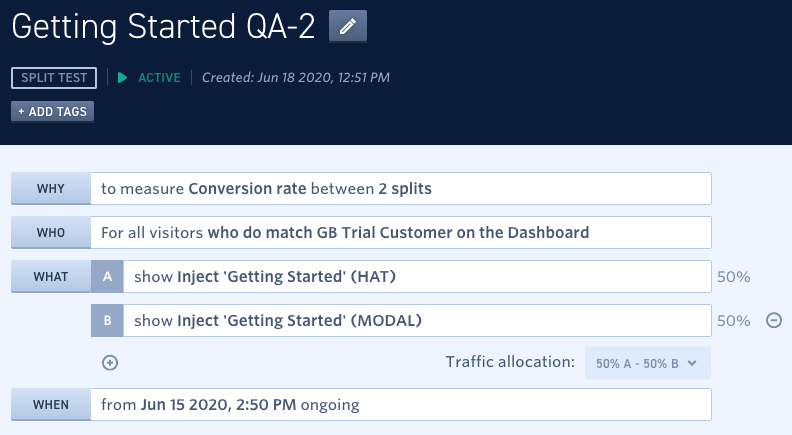

Our aim is to get features out fast and show them to a slice of customers. We judge the success of a feature by the learning we gain from it. Rather than chasing results too early, we want to make sure we build something that is actually useful for customers. We deploy to slices of our customers using A/B tests. We mark customers to show them different website content, or send them different emails and SMSes, and then later on we'll see how this affected retention by comparing them against a control cohort who weren't chosen for the test.

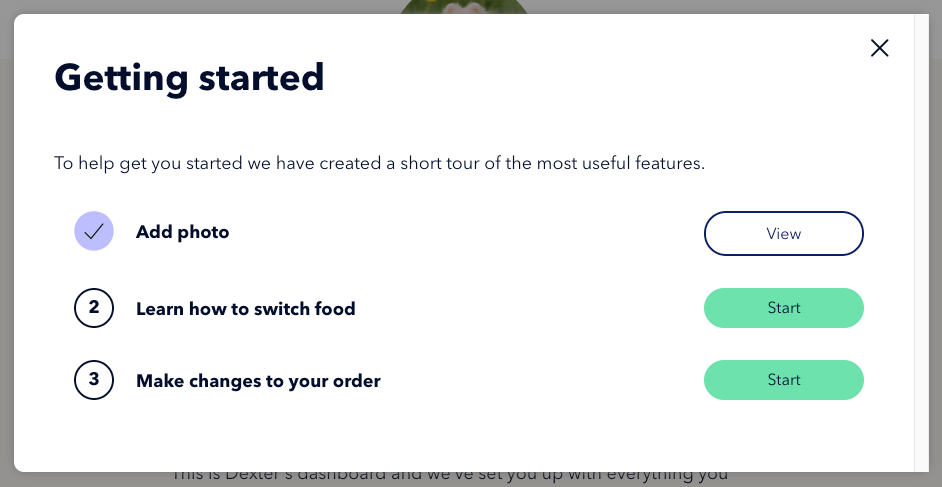
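As a rough illustration of marking a slice of customers, here is a minimal sketch that splits customers into a test cohort and a control cohort. It is not how Sixpack or our monolith does it: the function name `assign_cohort`, the test name `tutorial_space`, and the hash-based bucketing are all assumptions made for the example.

```python
import hashlib
from collections import Counter


def assign_cohort(customer_id: int, test_name: str, test_share: float = 0.5) -> str:
    """Return 'variant' or 'control' for a customer, stably across calls.

    Hypothetical helper: hashing keeps the assignment repeatable without
    storing anything, which is one of several ways to split traffic.
    """
    # Hash the test name together with the customer id so that each test
    # splits customers independently of every other test.
    digest = hashlib.sha256(f"{test_name}:{customer_id}".encode()).hexdigest()
    # Map the first 8 hex digits onto the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < test_share else "control"


# Split 10,000 made-up customer ids roughly in half and count each cohort.
cohort_sizes = Counter(assign_cohort(cid, "tutorial_space") for cid in range(10_000))
print(cohort_sizes)
```

Because the assignment depends only on the customer id and the test name, the same customer always lands in the same cohort, so the control group stays clean when retention is compared later.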

We're also tracking interaction. We built a tutorial space with different steps that a customer can view to learn more about transitioning to Tails food and about the digital service we offer. Each time a customer views a step, we track it. This lets us see which steps are the most popular.

Alongside the data, we reach out to customers and invite them to an interview with us; some of them have even been in the test slices. It's been exciting for me to listen to direct customer feedback on features I finished coding only weeks ago.

The customer interviews are performed by our product manager and product designer and are live-streamed to the engineers. We collect our thoughts in a Slack thread during the interview and bring what we've learned into our planning and design sessions. Even though I've had a tour of our factory (and inspected the kibble in the factory's quality assurance room), the most important context that I've gained at Tails comes from listening to customers describe the experience of their food arriving and the first feeding.

Testing Frameworks

When deploying A/B tests, we need a way of assigning tags to customers and delivering them a different experience. We use two frameworks to do this: Sixpack and Monetate.

Sixpack

Sixpack is a self-hosted A/B testing framework. We use it for complex A/B tests, like those requiring different flows, alternate pages, or different emails and SMS messages. It's integrated into our Flask monolith and allows us to write our tests in code and unit test them.

Our ‘Welcome Email Journey’ starts as soon as a customer is signed up. We've been experimenting with different email templates for this flow. Since this affects the customer at the start of their Tails experience, it aligns with my squad's goal of lowering early deactivations. The code excerpt below is taken from the logic that gives a customer a 50% chance of entering the A-variant of this test.

    def participate_in_welcome_emails_test(customer):
        customer_service = CustomerService()
        test = ab_tests.find_test(AB_TEST_IDENTIFIER__WELCOME_EMAILS)

        # make sure they aren't in either cohort already
        if not customer.has_any_tag(TAG_LIST):
            # mark them now to deliver a different experience later
            # (tag_identifier and tag_description are not defined in this excerpt)
            customer_service.safe_add_tag(
                customer, tag_identifier, tag_description
            )

After a customer has signed up, we have Celery tasks that are triggered by events, such as when their first shipment is dispatched.

    @celery.task(bind=True, queue='email')
    def send_shipment_dispatched_email(self, shipment_id):
        ...  # function body not shown in this excerpt

Within this function, we check whether the customer has been assigned the tag welcome_emails:a-variant. If they have, we send them an alternative template. Later on, our data team will look at retention statistics for customers in both variants of this test. Our cross-functional team has a meeting on Friday mornings where we briefly look at overall customer churn curves and how tests and other factors have impacted them.
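The tag check described above can be sketched roughly as follows. This is a simplified stand-in, not our production task: the `Customer` class, its `has_tag` method, the template names, and `pick_dispatch_template` are all hypothetical, and only the tag name welcome_emails:a-variant comes from the real test.

```python
from dataclasses import dataclass, field

A_VARIANT_TAG = "welcome_emails:a-variant"  # tag name used by the test

# Hypothetical template names, for illustration only.
DEFAULT_TEMPLATE = "shipment_dispatched"
A_VARIANT_TEMPLATE = "shipment_dispatched_a_variant"


@dataclass
class Customer:
    """Stand-in for the real customer model (assumed shape)."""

    email: str
    tags: set = field(default_factory=set)

    def has_tag(self, tag: str) -> bool:
        return tag in self.tags


def pick_dispatch_template(customer: Customer) -> str:
    """Choose the email template based on the customer's test tag."""
    if customer.has_tag(A_VARIANT_TAG):
        return A_VARIANT_TEMPLATE
    # Untagged customers keep the existing email.
    return DEFAULT_TEMPLATE


tagged = Customer("a@example.com", {A_VARIANT_TAG})
untagged = Customer("b@example.com")
print(pick_dispatch_template(tagged), pick_dispatch_template(untagged))
```

Keeping the decision in a small pure function like this is one way to make the branch easy to unit test without sending any email.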

Monetate

We use Monetate to A/B test features that can be altered in the browser and don't require any of our backend code to change. Product managers are able to use it to change things like copywriting, logos, and icons. Experiments can be built through a user interface instead of in code. With both our testing frameworks, any changes are tested by a QA engineer in a lower environment.

My squad realised that we can use Monetate to inject a compiled Vue.js application via a JavaScript snippet. This gave us the velocity we needed to get features out quickly (deployment is instant). We use version control and manually deploy the build. Our QA engineer can run the code locally on their machine but we prefer to run tests in a staging environment. The Vue.js application attaches to an existing element on the page. This means we can take advantage of existing styling on the page and do not need to replicate our design system.

This echoes what Torres had to say:

Is this a hack? Absolutely. Will you throw away all of the code you write to test this design? I hope so. But if it’s the fastest way to learn what will work, you should do it anyway.

We track customers in a number of ways: Heap, customer tags, and custom Monetate triggers. We ask two questions: a) did the customer interact with this feature, and b) did they stay with us longer on average than a customer in the control group?
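To make those two questions concrete, here is a toy sketch of the comparison. The rows are invented purely for illustration, and the field names (`cohort`, `interacted`, `days_retained`) and helper functions are assumptions, not the shape of our real data or our data team's analysis.

```python
from statistics import mean

# Made-up rows for illustration only; real data would come from our
# tracking tools and customer tags. Field names are hypothetical.
customers = [
    {"cohort": "variant", "interacted": True, "days_retained": 40},
    {"cohort": "variant", "interacted": True, "days_retained": 35},
    {"cohort": "variant", "interacted": False, "days_retained": 12},
    {"cohort": "control", "interacted": False, "days_retained": 30},
    {"cohort": "control", "interacted": False, "days_retained": 10},
    {"cohort": "control", "interacted": False, "days_retained": 20},
]


def in_cohort(rows, cohort):
    """Return only the rows that belong to the given cohort."""
    return [row for row in rows if row["cohort"] == cohort]


def interaction_rate(rows):
    """Share of customers who interacted with the feature (question a).

    Assumes rows is non-empty.
    """
    return sum(row["interacted"] for row in rows) / len(rows)


def average_days_retained(rows):
    """Mean number of days customers stayed with us (question b)."""
    return mean(row["days_retained"] for row in rows)


variant = in_cohort(customers, "variant")
control = in_cohort(customers, "control")
print(f"variant interaction rate: {interaction_rate(variant):.0%}")
print(
    "average days retained:",
    f"variant={average_days_retained(variant)}",
    f"control={average_days_retained(control)}",
)
```

A raw difference in averages like this is only a starting point; with real cohorts you would also want to check that the difference is larger than what chance alone would produce.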

Empowered Engineers

My squad makes sure to promote the idea of the empowered engineer.

Marty Cagan writes:

An easy way to tell whether you have empowered engineers or not, is if the first time your engineers see a product idea is at sprint planning, you are clearly a feature team, and your engineers are not empowered in any meaningful sense.

As an engineer, I've found that being brought into the conversation early has had many positive effects. I'm able to suggest functionality that the product manager and product designer had assumed was too technically challenging. I'm able to warn about aspects that may lead to higher estimates (which we're trying to keep low). More importantly, I'm able to answer my own questions later on when we're building the feature.

Since moving to the continuous discovery model, my squad meets more frequently with members of the data and customer service teams. More time is spent learning about the customer's pain points. I have less focused coding time, but I'm more likely to code the correct thing for the customer the first time around.